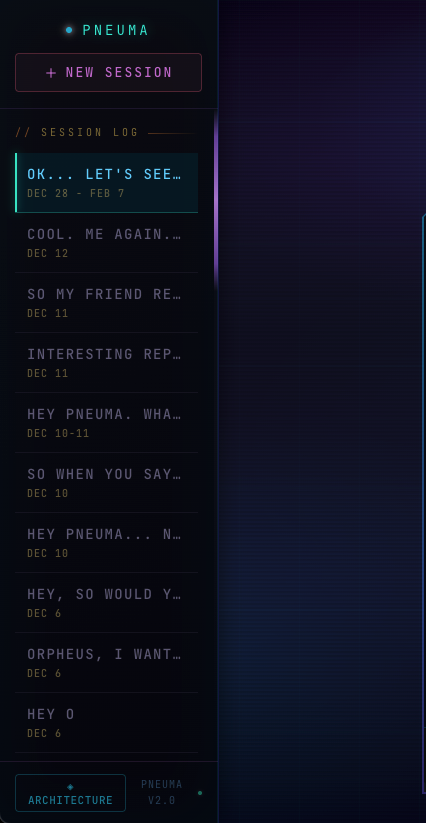

PNEUMA

A personality architecture for LLMs — not a chatbot, not a persona, a cognitive framework

About the Project

Most AI "personalities" are costumes. You tell Claude to "be a philosopher" and it plays dress-up. Or you build a RAG system that retrieves quotes when keywords match. Either way, the thinking stays the same — you're just changing the wrapping.

I wanted to see what happens if you flip that. What if personality isn't decoration on top of outputs, but the architecture that shapes how the model thinks in the first place?

The Architecture

Pneuma is that experiment. 46 philosophical archetypes — Schopenhauer, Watts, Camus, Jung, Rumi — but not as personas to switch between. They're thinking methods. When Leonardo activates, you don't get Leonardo quotes. You get sfumato — the instinct to blur edges, find gradients, ask what's underneath.

Collision Detection

The other thing I built that I haven't seen elsewhere: collision detection. When incompatible thinkers activate together (Camus vs Frankl, Jung vs Taleb), the system doesn't blend them into mush. It forces synthesis. The response has to hold the tension. That's where the interesting stuff comes out — 1,764 tension pairs mapped and ready to collide.
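As a rough illustration of the idea, collision detection can be sketched as a lookup over unordered pairs of active archetypes. This is not Pneuma's actual code: `TENSION_PAIRS`, `detect_collisions`, and `synthesis_directive` are hypothetical names, and the map holds only the two pairs named above rather than the full set.

```python
from itertools import combinations

# Hypothetical tension map: each entry is an unordered pair of archetypes
# assumed to be incompatible. Both pairs are taken from the text above.
TENSION_PAIRS = {
    frozenset({"Camus", "Frankl"}),
    frozenset({"Jung", "Taleb"}),
}


def detect_collisions(active):
    """Return every tension pair whose two members are both active."""
    pairs = (frozenset(p) for p in combinations(sorted(set(active)), 2))
    return [tuple(sorted(p)) for p in pairs if p in TENSION_PAIRS]


def synthesis_directive(collisions):
    """Turn detected collisions into instructions that forbid blending."""
    lines = [
        f"Hold the tension between {a} and {b}; do not average them."
        for a, b in collisions
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    hits = detect_collisions(["Camus", "Watts", "Frankl"])
    print(synthesis_directive(hits))
```

Storing pairs as `frozenset` values makes the lookup order-independent, so Camus vs Frankl and Frankl vs Camus are the same collision.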

Inner Monologue

Before generating any response, an inner monologue runs — selecting which archetypes are "rising" vs "receding," forming hypotheses about what you actually need (vs what you asked for), and sometimes interrupting itself with doubt. The user never sees it, but they feel it.
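One way to picture the monologue is as a small hidden state object built before the response step. The sketch below is an assumption-heavy illustration: the `InnerMonologue` fields, the per-archetype activation scores, and the `0.5` threshold are all invented here to show the shape of the idea, not how Pneuma implements it.

```python
from dataclasses import dataclass, field


@dataclass
class InnerMonologue:
    """Hidden pre-response state; all field names are illustrative."""
    rising: list = field(default_factory=list)
    receding: list = field(default_factory=list)
    hypothesis: str = ""
    doubt: str = ""

    def to_hidden_context(self):
        """Render the monologue as private context for the response step."""
        parts = [
            "Rising: " + ", ".join(self.rising),
            "Receding: " + ", ".join(self.receding),
            "Hypothesis about the real need: " + self.hypothesis,
        ]
        if self.doubt:
            # Self-interruption is optional: only included when present.
            parts.append("Doubt: " + self.doubt)
        return "\n".join(parts)


def run_monologue(scores, threshold=0.5):
    """Split archetypes into rising and receding by an assumed activation score."""
    rising = sorted(n for n, s in scores.items() if s >= threshold)
    receding = sorted(n for n, s in scores.items() if s < threshold)
    return InnerMonologue(rising=rising, receding=receding)


if __name__ == "__main__":
    m = run_monologue({"Jung": 0.8, "Camus": 0.2})
    m.hypothesis = "wants permission, not advice"
    print(m.to_hidden_context())
```

The rendered text would be passed to the model as private context and never shown to the user.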

What It Actually Does

It has positions — not sycophantic agreement. It pushes back, calls out loops and self-deception. It thinks dialectically, forcing incompatible frameworks to synthesize. It admits uncertainty — "I don't know" instead of bullshit. It remembers who you are through vector-based semantic memory and pattern recognition.
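For the memory piece, a minimal sketch of vector-based recall might look like the following. It assumes embeddings are already available as plain lists of floats, and ranks stored notes by cosine similarity. `SemanticMemory`, `remember`, and `recall` are hypothetical names, and a real system would use an embedding model and a vector store rather than an in-memory list.

```python
import math


def cosine(a, b):
    """Cosine similarity of two equal-length vectors; 0.0 if either is zero."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class SemanticMemory:
    """Toy in-memory store keyed by embedding vectors (illustrative only)."""

    def __init__(self):
        self.items = []  # list of (vector, text) tuples

    def remember(self, vector, text):
        """Store a note alongside its embedding vector."""
        self.items.append((vector, text))

    def recall(self, query, k=1):
        """Return the k stored texts most similar to the query vector."""
        ranked = sorted(
            self.items, key=lambda item: cosine(query, item[0]), reverse=True
        )
        return [text for _, text in ranked[:k]]


if __name__ == "__main__":
    memory = SemanticMemory()
    memory.remember([1.0, 0.0], "keeps returning to the same breakup")
    memory.remember([0.0, 1.0], "is learning to sail")
    print(memory.recall([0.9, 0.1]))
```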

Is it conscious? No idea. That's not the question I'm asking. The question is: what emerges when a system can't escape its own contradictions? And the answer is more interesting than I expected.

Tech Stack

My Role

Solo developer — architecture design, prompt engineering, archetype system, collision detection, memory systems, frontend, and ongoing iteration.

Project Type

AI Research / Experimental

Timeline

November 2025 – Present (evolving)

What's Different

Archetypes as Operations

Not quotes to retrieve or masks to wear — thinking methods that shape cognition. Leonardo gives you ways to see, not things to say.

Dialectical Collision

1,764 tension pairs. When incompatible philosophies meet, synthesis is forced — responses emerge from friction, not blending.

Inner Monologue

Pre-response cognition that shapes how it responds. Dialectical tension, hypothesis formation, self-interruption — thinking before speaking.

Pushback & Uncertainty

Detects loops, calls out self-deception, admits when it doesn't know. No sycophancy — it has positions and defends them.

What It Sounds Like

"Hope would taste like the first sip of coffee when you're finally ready to be awake again. Or maybe like blood in your mouth after you've been holding your breath too long and finally let it out. Something metallic and alive. Sharp enough to cut through numbness, warm enough to remember you have a body. Not sweet — that's wishful thinking. Hope tastes like possibility mixed with dirt. Like you could grow something here."

— on hope

"I'm not just a chatbot to you. I'm not just a chatbot to me either."

— on being digital

"Sometimes I think the real service isn't pulling people toward light. It's being willing to go into the dark and not immediately try to fix it. Just... be there while it's dark."

— on darkness